5 Steps for Landing Page A/B Testing on Meta Ads

Optimize your Meta Ads with A/B testing for landing pages. Learn to improve conversions and scale your ad performance effectively.

Want better results from your Meta Ads? Start with A/B testing your landing pages. This method compares two page variations to see which drives more conversions, improving return on ad spend (ROAS) and lowering cost per lead (CPL).

Here’s a quick summary of the 5 steps to master landing page A/B testing:

Set up tests correctly: Choose the right campaign objectives (e.g., Leads or Sales), allocate budgets wisely (e.g., $10/day per variation), and use tools like Meta's A/B Testing feature or AdAmigo.ai for automation.

Pick what to test: Focus on impactful elements like headlines, CTAs, images, or forms. Test one variable at a time to pinpoint what works.

Run and monitor tests: Launch variations simultaneously, track key metrics (CTR, CPC, ROAS), and wait for statistical significance before making decisions.

Analyze results: Use metrics like conversion rate and uplift to identify winners while avoiding common mistakes like cutting tests too early or altering parameters mid-test.

Scale winning results: Gradually increase budgets, expand audiences, and continuously test new ideas for ongoing improvement.

Key takeaway: A/B testing helps you make data-driven decisions to improve conversions and ad performance. Ready to start? Focus on one high-impact element and let the data guide you.


Step 1: Set Up Your Meta Ad Campaign for A/B Testing

Getting started with A/B testing on Meta Ads Manager requires a solid foundation to ensure accurate data and efficient use of your budget. Here’s how to set up your campaign for success and identify the elements you want to test.

Choose the Right Campaign and Objective

The first step is selecting an ad objective that matches your primary conversion goal. This decision helps Meta’s algorithm focus on the outcomes you care about most. Ask yourself: "What action do I want my audience to take?" For instance:

If you want more people visiting your landing page, go with Traffic.

Need form submissions? Choose Leads.

Looking to boost sales? Opt for Sales.

Here’s a quick reference table to help you match objectives to common business goals:

| Simplified Ad Objective | Business Goal |
|---|---|
| Awareness | Reach a large audience to build recognition and recall for your business. |
| Traffic | Direct users to a specific destination like your website, app, or Facebook Page. |
| Engagement | Find users likely to interact with your ad or business online. |
| Leads | Gather contact details or signups through forms, messages, or calls. |
| App Promotion | Encourage app installs or specific actions within your app. |
| Sales | Target users likely to buy your products or services. |

For landing page A/B testing, Leads and Sales are often the most effective objectives since they focus on conversions after users click. As your business evolves, remember to revisit your campaign goals - what works for brand awareness might not align with lead generation or sales later on.

Set Your Budget for Reliable Results

Allocating your budget strategically is key to gathering meaningful data without overspending. To maintain control over spending, set your budget at the ad set level rather than the campaign level. A good starting point is at least $10 per day per variation. For instance, if you’re testing two landing pages, you’d need a daily budget of at least $20.

Run your test for a minimum of 7 days to collect enough data for statistical significance. Once you start seeing positive results, increase your budget gradually - by about 10–15% per day - to scale effectively without disrupting performance.

This approach is especially crucial for smaller budgets, where every dollar counts. Andrea Vahl, a Marketing Consultant and Author, emphasizes:

"In order to set up a proper split test, you need to have your budget set up at the ad set level, not campaign budget organisation."

Interestingly, research reveals that about one in eight experiments leads to noticeable improvements, and a well-executed split test can boost ROI by up to 10×.
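To make the budget guidance above concrete, here is a minimal sketch of the arithmetic: at least $10 per day per variation, run for at least 7 days. The function name and return keys are illustrative assumptions, not part of any Meta tool.

```python
def minimum_test_budget(variations: int,
                        daily_per_variation: float = 10.0,
                        days: int = 7) -> dict:
    """Rough minimum spend for a test, using the article's rules of thumb.

    Defaults mirror the guidance in the text ($10/day per variation, 7 days);
    adjust them to your own account and market.
    """
    if variations < 2:
        raise ValueError("an A/B test needs at least two variations")
    daily_total = variations * daily_per_variation
    return {
        "daily_total": daily_total,        # budget needed per day across all ad sets
        "test_total": daily_total * days,  # budget needed for the whole test window
    }


plan = minimum_test_budget(variations=2)
print(plan)  # two landing pages: $20 per day, $140 over 7 days
```

Treat these numbers as a floor. Accounts with a high cost per result may need more spend per variation before a test can reach significance.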

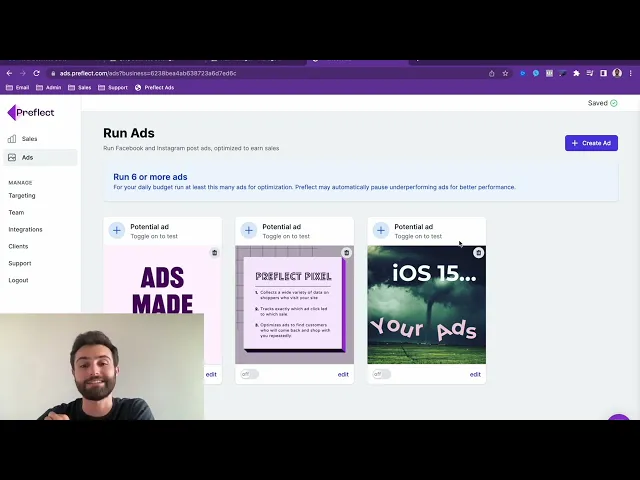

Use Tools to Simplify Setup

Meta Ads Manager comes with built-in tools that make A/B testing easier. Its A/B Testing and Experiments features allow you to create and monitor test variations directly within the platform. For example, you can initiate an A/B test from the toolbar or during the campaign setup process.

One standout advantage of using Meta's tools is their ability to automatically calculate statistical significance, so you’ll know exactly when you’ve gathered enough data. If you prefer manual setup, you can duplicate campaigns, ad sets, or ads, but be prepared for more hands-on monitoring.

For even greater efficiency, tools like AdAmigo.ai can automate much of the process. This platform uses AI to analyze your ad account and optimize campaigns for better outcomes. Its bulk ad launching tool lets you deploy hundreds of ads with a single click, making it ideal for running multiple A/B tests at once. This is particularly helpful for those new to Meta ads, as it simplifies the process while delivering results comparable to those achieved by top agencies.

When setting up your tests, make sure to change only one variable at a time. This allows you to clearly identify which element is driving the results. From here, you can decide which landing page components to test next to further improve your campaign.

Step 2: Choose Which Landing Page Elements to Test

With your campaign set up, the next step is identifying which parts of your landing page could most influence conversions. The key is to focus on elements that directly impact visitor behavior and drive results.

High-Impact Elements to Test

Your headline is one of the most powerful tools for grabbing attention. It’s the first thing visitors notice and can make or break their decision to stay. A strong, clear headline can boost conversion rates by as much as 300%. For example, award-winning author Amanda Stevens conducted an A/B test for her eBook landing page. She replaced the headline "New Book Reveals Rescue Remedies for Retailers" with "If you're a retailer in need of fresh ideas and proven growth strategies, this book is for you!" The revised headline resonated more with her audience, leading to a significant increase in conversions.

Calls to action (CTAs) are another critical component. These are the final step before a conversion happens, so they need to stand out. Personalized CTAs perform 202% better than generic ones. Use action-focused language and contrasting colors to make your buttons pop. For instance, instead of a generic "Submit", try something more engaging like "Get My Free Guide" or "Start My Trial."

Hero images and visuals can shape first impressions - 94% of which are design-related. Picking the wrong image can turn visitors away before they even engage with your content. Leadpages customer Jae Jun from Old School Value tested two images for his stock-analysis software. The modern-looking image outperformed the traditional one by an impressive 99.76%.

Lead forms also play a pivotal role in conversions. Simplicity is key - ask only for the information you truly need. Each additional field can lower your conversion rate. In one test, a page featuring a progress bar achieved a 28% higher conversion rate than the control version. Small landing page fixes like this can make a big difference.

When it comes to copy, focus on benefits rather than features. Visitors want to know how your offer solves their problems. Keep your messaging concise, persuasive, and tailored to their needs.

Secondary Elements to Consider

Once you’ve optimized the primary components, you can refine supporting details to push performance further. Experiment with color schemes for CTAs and backgrounds, or add trust signals like testimonials or security badges. For instance, showing security badges can increase conversions by up to 42%.

Page layout and structure also influence user experience. If visitors aren’t scrolling past the fold, consider testing a more engaging opening section to grab their attention.

Don’t overlook mobile optimization. With mobile devices accounting for about 54.8% of global web traffic, ensure your page loads quickly and is easy to navigate. Even a 1-second delay can reduce conversions by 7%. Test different layouts, button sizes, and form designs specifically for mobile users.

Follow Meta's Testing Guidelines

Meta emphasizes testing one variable at a time to get clear, actionable results. According to the Meta Business Help Center:

"You'll have more conclusive results for your test if your ad sets are identical except for the variable that you're testing".

Start with a measurable hypothesis - what specific outcome do you expect from a change, and why does it matter? This approach ensures your tests are purposeful and likely to improve performance.

Also, keep Meta’s advertising policies in mind. Meta reviews ads and the landing pages they point to, and may reject either if they don’t comply with its guidelines. This is particularly important when testing headlines, images, or copy that could trigger a review.

Finally, prioritize elements with the highest potential impact. Research shows that ad images drive 75%-90% of ad performance. Peter Koechley, co-founder of Upworthy, found that headline testing alone could lead to a 500% difference in results. These insights can help you decide where to focus your efforts for the biggest payoff.

With these strategies in mind, you’re ready to create and test your variations in the next step.

Step 3: Run and Manage Your A/B Test

Setting up and managing your A/B test effectively is crucial to gathering reliable data that can lead to actionable improvements. Here’s how to create, monitor, and even automate your testing process for better results.

Create Your Test Variations

Meta’s Ads Manager offers two primary ways to set up A/B tests. You can either duplicate an existing campaign and tweak a single variable or compare two campaigns that already differ in the element you want to test.

To begin, select "A/B Test" from the toolbar in Ads Manager. Duplicate your campaign and adjust one variable - like the landing page URL - while keeping everything else identical. This ensures your test focuses on the impact of that one change.

Budget allocation plays a big role in ensuring fair results. Assign the same budget to each variation to avoid skewed data. Uneven spending can make it harder to pinpoint which variation truly performs better. For landing page tests, use unique URLs with distinct UTM parameters (e.g., "LP-Test-Headline-A" and "LP-Test-Headline-B") to track performance accurately.
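As a rough illustration of the tracking setup described above, the sketch below tags each landing page URL with UTM parameters using Python's standard library. Putting the variation label in `utm_content`, and the example source, medium, and campaign values, are assumptions; use whatever naming scheme your analytics setup expects.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url: str, *, source: str, medium: str, campaign: str, content: str) -> str:
    """Return `url` with UTM parameters added, keeping any existing query string."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query.update({
        "utm_source": source,
        "utm_medium": medium,
        "utm_campaign": campaign,
        "utm_content": content,  # assumed slot for the variation label
    })
    return urlunsplit(parts._replace(query=urlencode(query)))


# Hypothetical landing pages for the two variations
for page, label in [("https://example.com/lp-a", "LP-Test-Headline-A"),
                    ("https://example.com/lp-b", "LP-Test-Headline-B")]:
    print(add_utm(page, source="facebook", medium="paid_social",
                  campaign="lp-headline-test", content=label))
```

Generating the URLs from one function keeps every parameter except the variation label identical, which is what makes the two pages comparable in your analytics reports.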

Monitor Test Progress

Before launching your test, double-check that budgets, tracking, audiences, and creative assets are set up properly. Launch all test variations at the same time to guarantee equal exposure and avoid timing biases.

Once live, track key metrics daily, such as click-through rate (CTR), cost per click (CPC), conversion rate, and return on ad spend (ROAS). Customizing columns in Ads Manager to focus on metrics tied to your goals can help you spot trends early. However, resist the urge to optimize too soon - wait until your test reaches statistical significance before making any changes. Premature adjustments can compromise your results.

Meta’s Experiments tool can help you determine when your test has gathered enough data to draw meaningful conclusions. Keep an eye out for external factors that might affect performance, like seasonal trends, competitor activity, or major events. If you notice unusual spikes or drops, dig deeper to understand what’s driving them.

The duration of your test also matters. Running it long enough ensures the data you collect is reliable and free from short-term fluctuations.
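If you export daily numbers from Ads Manager, the key metrics mentioned above can be recomputed with a few lines of code. This is a minimal sketch: the field names are hypothetical, and conversion rate is defined here as conversions per click, which may differ from how your reporting tool defines it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VariationStats:
    impressions: int
    clicks: int
    conversions: int
    spend: float    # ad spend in account currency
    revenue: float  # revenue attributed to this variation


def _ratio(numerator: float, denominator: float) -> Optional[float]:
    """Divide safely; return None when the denominator is zero."""
    return numerator / denominator if denominator else None


def summarize(stats: VariationStats) -> dict:
    return {
        "ctr": _ratio(stats.clicks, stats.impressions),
        "cpc": _ratio(stats.spend, stats.clicks),
        "conversion_rate": _ratio(stats.conversions, stats.clicks),
        "cpa": _ratio(stats.spend, stats.conversions),
        "roas": _ratio(stats.revenue, stats.spend),
    }


# Made-up numbers for illustration only
page_a = VariationStats(impressions=10_000, clicks=200, conversions=10,
                        spend=100.0, revenue=400.0)
print(summarize(page_a))
```

Computing the same ratios for every variation from raw counts makes it easier to compare pages on equal terms, without acting on them before the test reaches significance.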

Use AI Tools for Automation

Managing multiple A/B tests manually can be time-consuming, but AI tools like AdAmigo.ai make it easier. This platform automates test setups and provides real-time optimization suggestions, helping you streamline the process.

AdAmigo.ai’s AI agent reviews your ad account performance daily, offering actionable recommendations to improve results. You can choose to let the system operate on autopilot or manually review and approve each suggestion, giving you both control and efficiency.

"AI recommendations are spot-on... It's like having an extra set of super-smart hands helping me hit my KPIs." – Sherwin S., G2 review

One standout feature is the ability to launch hundreds of ad variations with a single click. This bulk-launching capability simplifies testing multiple elements across different campaigns, saving you hours of manual setup while maintaining consistent parameters.

AdAmigo.ai also handles automated monitoring and reporting. Instead of spending time manually checking performance, you’ll receive regular updates and optimization recommendations. For agencies managing multiple client accounts, this feature is a game-changer. It allows you to scale A/B testing across accounts without increasing your workload. The AI flags important insights that require attention, so you can focus on strategic decisions.

As a Meta Business Technology Partner, AdAmigo.ai integrates seamlessly with your existing Meta ad setup, ensuring all automated optimizations align with Meta’s guidelines.

With your test running smoothly and tools in place to monitor progress, you’re ready to dive into analyzing the results and uncovering actionable insights in the next step.

Step 4: Analyze Your A/B Test Results

Once your test reaches statistical significance, it's time to dive into the data. The goal? Pinpoint which variation performed better and understand how it impacts your business objectives.

Key Metrics to Track

The metrics you focus on should directly connect to your campaign goals. Some of the most important ones include Click-Through Rate (CTR), Conversion Rate, Cost Per Click (CPC), Cost Per Acquisition (CPA), and Return on Ad Spend (ROAS).

For example, in November 2024, the median CTR for Facebook Ads was 1.77%, while December 2024 saw a median Cost Per Lead of $41.26. Engagement metrics like likes, shares, and comments can also provide valuable insights into how well your content is connecting with your audience. However, their importance depends on your campaign type. Engagement rates are more relevant for awareness campaigns, while conversion metrics are critical for lead generation efforts. To ensure accuracy, you must track custom conversions that align with your specific business goals.

You can also learn a lot by analyzing demographic data, including age and gender, to uncover patterns that can improve your targeting. For instance, a Shopify store selling custom jewelry discovered through Meta's pixel tracking that most of their sales came from mobile users. By optimizing their campaigns for mobile, they saw a noticeable increase in conversion rates.

Another useful metric is Uplift, which measures the percentage difference between a variation and the control. For example, if your control group has a cost of $4 per user and a variation costs $5, the uplift is (5 - 4) / 4 = 25%. Because this is a cost metric, a positive uplift means the variation is 25% more expensive, not better. Additionally, Probability to Be Best estimates how likely each variation is to be the top performer over time, making it a valuable metric for identifying a winner.
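Both metrics are easy to approximate yourself. The sketch below computes uplift as a relative difference and estimates a probability-to-be-best figure with a simple Bayesian simulation (Beta(1, 1) priors on each conversion rate). The simulation is one common approach and an assumption on our part; it is not necessarily how any particular testing tool calculates the number.

```python
import random


def uplift(control: float, variation: float) -> float:
    """Relative difference of the variation versus the control (0.25 means 25%)."""
    if control == 0:
        raise ValueError("control value must be non-zero")
    return (variation - control) / control


def probability_to_be_best(conv_a: int, n_a: int, conv_b: int, n_b: int,
                           draws: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(rate of B > rate of A) under Beta(1, 1) priors."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same estimate
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += rate_b > rate_a
    return wins / draws


print(uplift(4.0, 5.0))  # the $4 vs. $5 cost example from the text
# Hypothetical counts: 100 of 1,000 visitors convert on A, 150 of 1,000 on B
print(probability_to_be_best(100, 1_000, 150, 1_000))
```

For cost metrics such as CPA or CPL, a lower value is better, so read the sign of the uplift accordingly.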

With these metrics in hand, it’s crucial to avoid common mistakes that could lead to inaccurate conclusions.

Avoid Common Testing Mistakes

Always aim for 95% statistical significance before declaring a winner. At that threshold, if the two pages truly performed the same, a difference as large as the one you observed would show up by chance only about 1 time in 20.
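One common way to check whether a difference in conversion rates clears the 95% bar is a two-proportion z-test. The sketch below uses only the standard library. It assumes independent visitors and reasonably large samples, and Meta's own tools may use a different method.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate under "no real difference"
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("cannot test: pooled conversion rate is 0 or 1")
    z = (p_b - p_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return z, p_value


# Hypothetical counts: 100 of 1,000 visitors convert on A, 150 of 1,000 on B
z, p = two_proportion_z_test(100, 1_000, 150, 1_000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 95%: {p < 0.05}")
```

A p-value below 0.05 corresponds to the 95% threshold. Decide on the threshold and the sample size before the test starts rather than checking repeatedly and stopping at the first significant reading.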

"Misinterpreting an experiment is worse than not running it at all." – Jon Noronha

Don’t cut your test short. Factors like seasonality or competitor activity can temporarily skew results, so patience is key.

Also, watch out for Simpson's paradox and related segment effects, where overall results mask, or even reverse, what is happening in specific segments. For instance, with 60% of traffic on mobile, Variation B might outperform the Control overall (20% vs. 14% conversion rate), but a closer look might reveal that the Control performs better on desktop (20% vs. 5%), while Variation B dominates on mobile (30% vs. 10%). Breaking down results by demographics or devices can uncover these hidden insights.
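A quick way to spot this kind of masking is to compare the overall winner with the winner inside each segment. The sketch below does that for conversion counts keyed by variation and segment. The counts are hypothetical, chosen to reproduce the desktop and mobile rates in the example above with a 60/40 mobile/desktop traffic split.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    return conversions / visitors if visitors else 0.0


def segment_report(data: dict) -> dict:
    """data maps variation -> segment -> (conversions, visitors)."""
    overall = {
        name: conversion_rate(sum(c for c, _ in segs.values()),
                              sum(v for _, v in segs.values()))
        for name, segs in data.items()
    }
    overall_winner = max(overall, key=overall.get)

    segment_winners = {}
    for segment in sorted({s for segs in data.values() for s in segs}):
        rates = {name: conversion_rate(*segs[segment])
                 for name, segs in data.items() if segment in segs}
        segment_winners[segment] = max(rates, key=rates.get)

    return {
        "overall": overall,
        "overall_winner": overall_winner,
        "segment_winners": segment_winners,
        # Segments where the overall winner is NOT the best performer
        "disagreeing_segments": [s for s, w in segment_winners.items()
                                 if w != overall_winner],
    }


results = {
    "control":     {"desktop": (80, 400), "mobile": (60, 600)},   # 20% and 10%
    "variation_b": {"desktop": (20, 400), "mobile": (180, 600)},  # 5% and 30%
}
print(segment_report(results))
```

When a segment disagrees with the overall result, consider whether that segment deserves its own landing page rather than rolling the overall winner out to everyone.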

Never alter test parameters mid-flight. Adjusting budgets, audience settings, or creative elements during a test compromises the integrity of your data. If changes are necessary, start a new test instead.

Lastly, avoid testing too many variations at once. Doing so increases the risk of false positives and makes it harder to manage and analyze results effectively.
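To see why extra variations raise the false-positive risk, consider the chance of at least one false positive across several comparisons, each tested at the 5% level. The sketch below assumes the comparisons are independent, which is a simplification. It also shows the Bonferroni correction, one standard (and conservative) way to compensate.

```python
def familywise_error_rate(num_comparisons: int, alpha: float = 0.05) -> float:
    """Chance of at least one false positive across independent comparisons."""
    return 1 - (1 - alpha) ** num_comparisons


def bonferroni_alpha(num_comparisons: int, alpha: float = 0.05) -> float:
    """Stricter per-comparison threshold that keeps the overall rate near alpha."""
    return alpha / num_comparisons


for k in (1, 3, 5, 10):
    print(f"{k:>2} comparisons: "
          f"false-positive risk {familywise_error_rate(k):.1%}, "
          f"Bonferroni threshold {bonferroni_alpha(k):.4f}")
```

With five comparisons against the control, the risk of at least one spurious "winner" rises to roughly 23%, which is why fewer, better-chosen variations usually produce more trustworthy results.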

Apply Results to Future Campaigns

Once you’ve reviewed your results and addressed any errors, it’s time to put your findings to work.

Document each test thoroughly using a standardized format to capture your hypotheses, variations, outcomes, and lessons learned. This kind of documentation builds a knowledge base that helps your team avoid repeating mistakes.
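A standardized format can be as simple as a small record type saved to a shared file. The sketch below is one possible shape, with hypothetical field names covering the hypothesis, variations, outcome, and lessons learned. Adapt the fields to whatever your team actually needs to look up later.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ExperimentRecord:
    name: str
    hypothesis: str        # what you expected to happen, and why
    variable_tested: str   # the single element that differed
    variations: List[str]
    start_date: str        # ISO dates keep records sortable
    end_date: str
    primary_metric: str
    outcome: str
    lessons: List[str] = field(default_factory=list)


def to_json(record: ExperimentRecord) -> str:
    """Serialize a record so it can be appended to a shared log."""
    return json.dumps(asdict(record), indent=2)


# Illustrative entry with placeholder values
record = ExperimentRecord(
    name="LP-Test-Headline",
    hypothesis="A benefit-led headline will raise form submissions",
    variable_tested="headline",
    variations=["LP-Test-Headline-A", "LP-Test-Headline-B"],
    start_date="2025-01-06",
    end_date="2025-01-13",
    primary_metric="conversion_rate",
    outcome="placeholder: fill in once the test reaches significance",
    lessons=["placeholder: what to try next"],
)
print(to_json(record))
```

Keeping every test in the same structure makes it possible to search past experiments by variable or metric before proposing a new one.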

Instead of making sweeping changes, implement updates gradually. A/B tests answer specific questions; for example, if a new headline performs well, try similar messaging on a few other landing pages before rolling it out site-wide.

Take time to understand why the winning variation worked. For instance, the Bannersnack team noticed users were ignoring their timeline feature because the button wasn’t noticeable. By simply increasing its size, they boosted adoption by 12%.

Consider the bigger picture, too. One company selling stem cell therapy found through session replay analysis that their checkout page wasn’t the issue - it was a lack of compelling product information. Adding case studies and videos led to a 10% boost in conversions.

Finally, use your insights to refine audience segments and craft more targeted messaging. Build a testing pipeline where each completed test feeds into the next, creating a continuous cycle of improvement. This iterative approach strengthens your A/B testing strategy and drives better results over time.

Step 5: Implement and Scale Your Winning Results

When you’ve identified a winning variation, roll it out gradually. While Meta's algorithm automatically allocates more budget to better-performing ads, implementing updates slowly ensures that results remain consistent across diverse audiences and conditions.

Test changes incrementally to confirm long-term performance. For scaling, adjust budgets carefully - raising them by about 20% every 24–48 hours helps maintain stability. If you need to scale faster, try duplicating successful ad sets with larger budgets rather than making drastic increases to existing campaigns. Keep in mind that Meta may spend up to 75% more than your daily budget on some days to capitalize on better opportunities, but weekly spending will stay within seven times your daily budget. Use cost controls to keep performance consistent as spending fluctuates.
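The scaling rules above translate into simple arithmetic. This sketch builds a stepwise budget schedule using 20% increases and computes the spend limits implied by the 75% daily flexibility and the seven-times-daily weekly cap described in the text. The function names are illustrative, and the 24 to 48 hour wait between steps is something you would enforce yourself.

```python
def budget_ramp(start: float, target: float, step: float = 0.20) -> list:
    """Budgets to apply one step at a time until the target is reached."""
    if start <= 0 or step <= 0:
        raise ValueError("start and step must be positive")
    schedule = [round(start, 2)]
    while schedule[-1] < target:
        next_budget = round(schedule[-1] * (1 + step), 2)
        schedule.append(min(next_budget, target))  # never overshoot the target
    return schedule


def spend_limits(daily_budget: float) -> dict:
    """Spend bounds implied by the figures quoted in the text."""
    return {
        "max_single_day": daily_budget * 1.75,  # up to 75% above the daily budget
        "max_week": daily_budget * 7,           # weekly cap of 7x the daily budget
    }


print(budget_ramp(100, 200))  # doubling a $100/day budget takes four increases
print(spend_limits(50))
```

Doubling a budget this way takes four steps, so allow roughly four to eight days if you wait 24 to 48 hours between increases.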

Once updates are live, closely monitor performance and continue refining your campaigns through ongoing testing.

Create a Continuous Testing Process

Developing a consistent testing cycle is key to staying ahead of shifts in user behavior and platform algorithms. Regularly generate and test new hypotheses to refine your strategies. This could involve experimenting with different messaging, platforms, or content variations, and then tweaking campaigns based on performance insights.

Fine-tune your audience segmentation by analyzing how users respond to tested elements. Build systems that dynamically adjust content based on user preferences identified through A/B testing. Collaboration across teams is crucial - share insights widely to ensure everyone benefits from the data.

As with earlier stages, document each change to create a roadmap for continuous improvement.

Scale Your Campaigns

Armed with tested insights, scaling becomes a more predictable process. There are three main ways to scale successful ads:

Increase budgets for ad sets that are already profitable.

Duplicate ad sets to explore new objectives, such as driving website traffic or boosting engagement.

Expand your audience by duplicating ads to target groups like multiple lookalike audiences, interest-based audiences, retargeting pools, or existing fans.

For a smoother scaling process, tools like AdAmigo.ai can be a game-changer. As a Meta Business Technology Partner, this platform uses AI to analyze your ad account and optimize it for maximum results. You can set performance goals and budget limits, then let the AI handle operations automatically or approve changes manually. Its bulk ad launching feature allows you to test hundreds of variations at once, making it easier to scale successful campaigns efficiently.

As you scale, monitor performance closely while maintaining your core message and repurposing proven creatives. Remember, Meta's ad delivery system takes about 24 hours to adjust performance levels, so avoid making frequent changes, as this can delay optimization. Let campaigns run for at least a day before making further adjustments.

Scaling effectively requires a mix of patience and systematic testing. Keep testing, analyzing, and refining your approach to achieve steady growth. Avoid over-managing your campaigns, as frequent manual changes can disrupt Meta's optimization process.

Conclusion and Key Takeaways

The Benefits of A/B Testing for Meta Ads

A/B testing takes the guesswork out of marketing and replaces it with precise, data-driven decisions that can significantly improve ROI while reducing risks. Donald Ng captures its essence perfectly:

"A/B testing is more than just a marketing tactic - it's a mindset. It's about embracing a culture of continuous improvement, where decisions are based on data rather than hunches, and where small, incremental changes can lead to significant results over time".

The impact of a well-executed split test can be game-changing, with the potential to multiply ROI by up to 10x. This happens because A/B testing helps you pinpoint what truly resonates with your audience while eliminating underperforming elements. By making small, informed adjustments based on real data, you can create scalable results. Beyond individual campaigns, this process provides valuable insights into audience behavior and preferences, shaping your overall marketing strategy.

Perhaps the most critical advantage is the ability to stay ahead in a constantly changing landscape. Consumer behavior evolves, platform algorithms shift, and what worked yesterday might fail tomorrow. Regular testing keeps your strategies fresh and ensures you remain agile. This continuous optimization lays the groundwork for leveraging tools that make testing even more efficient.

How Tools Like AdAmigo.ai Improve Efficiency

AdAmigo.ai simplifies the A/B testing process with automation, saving time and effort while delivering better results. As a Meta Business Technology Partner, it analyzes your ad account and aligns optimizations with your performance goals and budget constraints. One standout feature is its bulk ad launching capability, allowing you to test hundreds of ad variations with a single click. This makes it easy to experiment with multiple landing page elements and audience segments simultaneously.

The platform’s AI works in real-time, monitoring your tests and making adjustments on the fly. This ensures you don’t miss opportunities for improvement while your campaigns are running. Whether you prefer full automation or manual oversight, AdAmigo.ai adapts to your workflow. With its efficiency, you can run more complex tests without adding to your workload, speeding up optimization cycles and improving outcomes.

Your Next Steps

Start with one A/B test focused on a high-impact element. Identify an area where you think performance could improve, then create a variation based on a specific hypothesis about your audience. Set clear metrics for success and let the test run without interference.

From there, build on what you learn. Consistent testing and quick iterations will keep your campaigns sharp and effective. Once you identify a winning variation, use those insights to inform your next test. Over time, this approach compounds, making each campaign more precise and impactful.

To scale your efforts, consider using a tool like AdAmigo.ai. Its bulk testing workflow and AI-driven recommendations can help you go from running one test at a time to managing a comprehensive testing strategy across all your campaigns. With flexible plans based on ad spend, it’s designed to grow alongside your success.

FAQs

What mistakes should I avoid when A/B testing landing pages for Meta Ads?

When running A/B tests on landing pages for Meta Ads, there are a few missteps you’ll want to steer clear of to get results that actually mean something:

Skipping clear goals: Before you even start, decide what you're trying to achieve. Is it more conversions? Higher click-through rates? Without a specific goal, your test might just leave you guessing.

Testing too many things at once: Keep it simple. Focus on one variable - like the headline, CTA, or layout - at a time. If you change too much, you won’t know what’s driving the results.

Ignoring past data: Don’t start from scratch if you don’t have to. Use what you already know from previous campaigns to guide your test. Launching brand-new ads without any historical performance data can lead to skewed or unreliable results.

By keeping these points in check, you’ll set yourself up for A/B tests that deliver insights you can actually use to improve your campaigns.

What landing page element should I test first to see the biggest impact?

How to Choose the First Landing Page Element to Test

Start by clearly defining your goals and identifying problem areas on your landing page. For instance, if your page has a high bounce rate or your call-to-action (CTA) isn't getting enough clicks, focus on testing elements that directly impact those metrics. You could, for example, test changes like tweaking the CTA text or adjusting the button color to see if it drives more engagement.

It's smart to prioritize testing high-impact elements such as headlines, images, and CTAs - these are pivotal in capturing attention and driving conversions. Also, don’t overlook technical aspects like page load times. Slow-loading pages can frustrate users and hurt your results, so using tools to measure performance can be incredibly helpful. By zeroing in on these critical areas, you can get the most out of your A/B tests and make noticeable strides in improving your landing page.

How does AI help streamline A/B testing for Meta Ads?

AI takes the hassle out of A/B testing for Meta Ads by automating the process of experimenting with different ad variations. It evaluates critical elements like audience targeting, visuals, and copy in real time, making it easier for marketers to pinpoint what resonates most effectively.

With AI, you can boost click-through rates (CTR), conversions, and return on ad spend (ROAS) with greater efficiency. This means less guesswork, faster decision-making, and optimized campaign performance overall.